Social media is constantly evolving. Words and images that seem harmless today can be interpreted differently later.

This puts the onus on brands to keep updating their content moderation strategies so they stay in step with what society considers acceptable. They need to find the right balance between free speech and harmful speech.

Striking this balance can be tricky. That’s why we’ve written this guide: to help you judge how far is too far when it comes to content moderation. We cover what content moderation is, the challenges of the social media age, and best practices for getting the most out of your moderation efforts.

Let’s get right into it.

- What is content moderation?

- What is the difference between content moderation and content censorship?

- What are the benefits of content moderation?

- Why is content moderation important for user-generated campaigns?

- 5 types of content moderation

- What are the challenges of content moderation?

- Content moderation strategies for social media

- Do’s and don’ts of content moderation

- Content moderation tools and solutions

- 6 best content moderation practices

- What is the future of content moderation?

What is content moderation?

Content moderation refers to the practice of monitoring, reviewing, and editing user-generated content on digital platforms to ensure that it complies with the platform’s guidelines and policies.

It is usually done to prevent harmful or offensive content from being posted while promoting constructive and respectful discourse. In the age of social media, where users have more freedom than ever to share and express themselves, content moderation is non-negotiable.

This is why social media platforms have rules and regulations for what constitutes appropriate content. Content that violates these rules can include hate speech, cyberbullying, sexually explicit content, spam, and misinformation. Moderators review and remove content that violates these guidelines and policies to maintain a safe and respectful online community for all users.

How is content moderation done?

Content moderation is typically done through a combination of automated tools and human moderators. Automated tools, such as AI and machine learning, can help flag potential violations, while human moderators review flagged content and make final decisions on whether to remove or allow it based on established guidelines and policies. Moderators may also provide feedback to users and take action against accounts that repeatedly violate the platform’s rules.
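To make the flag-then-review workflow concrete, here is a minimal Python sketch. It assumes a hypothetical keyword list (`FLAG_TERMS`) standing in for a real AI or machine learning classifier, and the class and method names are illustrative rather than any platform’s actual API. Unflagged posts go live, while flagged posts wait in a queue until a human moderator makes the final call.

```python
from dataclasses import dataclass, field

# Hypothetical blocklist standing in for an AI/ML classifier.
FLAG_TERMS = ("buy followers", "free money", "idiot")


@dataclass
class Post:
    post_id: int
    text: str
    status: str = "pending"  # becomes "published", "in_review", or "removed"


@dataclass
class ModerationPipeline:
    review_queue: list = field(default_factory=list)

    def auto_flag(self, post: Post) -> bool:
        """Automated step: flag the post if it contains any listed term."""
        text = post.text.lower()
        return any(term in text for term in FLAG_TERMS)

    def submit(self, post: Post) -> None:
        """Publish unflagged posts; route flagged posts to human review."""
        if self.auto_flag(post):
            post.status = "in_review"
            self.review_queue.append(post)
        else:
            post.status = "published"

    def human_decision(self, post: Post, violates: bool) -> None:
        """Human step: a moderator makes the final call on a flagged post."""
        self.review_queue.remove(post)
        post.status = "removed" if violates else "published"


pipeline = ModerationPipeline()
ok_post = Post(1, "Love this product!")
spam_post = Post(2, "Buy followers here, cheap")
pipeline.submit(ok_post)
pipeline.submit(spam_post)
print(ok_post.status, spam_post.status)  # published in_review
pipeline.human_decision(spam_post, violates=True)
print(spam_post.status)  # removed
```

The split mirrors the division of labour described above: the automated step is cheap and fast but only flags, while the human decision is the one that actually removes or restores content.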

Examples of content that may require moderation include:

- Hate speech or discriminatory content

- Graphic or violent content

- Nudity or sexual content

- Spam or phishing attempts

- Intellectual property infringement

- False information or misinformation

- Personal attacks or harassment

What is the difference between content moderation and content censorship?

Content moderation and censorship are often used interchangeably, but they refer to different practices. Content moderation involves monitoring and reviewing user-generated content to ensure it complies with established guidelines and policies. It is typically done to promote responsible and respectful discourse while preventing harmful content from being posted.

In contrast, content censorship involves intentionally suppressing or prohibiting certain types of content, often due to political or ideological reasons. Content censorship is generally seen as violating free speech principles, while content moderation is necessary to maintain a safe and positive online community.

What are the benefits of content moderation?

- Protecting brand reputation: Imagine your brand’s page flooded with extremely negative reviews or spam comments. The knock-on effect on your sales and brand image can be severe, so it’s important to have a system that removes inappropriate or harmful content from your pages. By removing such content, your brand can maintain a positive image and avoid being associated with it.

- Building customer trust: Customers pay attention to your every move on social media, and every encounter with your brand’s page or content either builds their trust or erodes it. By moderating content on your social media channels, you demonstrate that your brand is committed to maintaining a safe and welcoming environment. It also shows customers that you take their concerns seriously and are willing to act on them.

- Improving customer engagement: Content moderation can help to improve customer engagement by ensuring that conversations and discussions on social media remain relevant and on-topic. By removing irrelevant or off-topic content, a brand can ensure that its social media channels are a valuable resource for customers seeking information about its products or services.

- Reducing legal risks: Content moderation can also reduce legal risks for a brand by ensuring that all content posted on social media channels complies with legal requirements and community guidelines. Removing content that violates these guidelines can help a brand avoid legal action and protect its reputation.

Why is content moderation important for user-generated campaigns?

Content moderation is necessary for user-generated campaigns because it ensures that the content being created aligns with the campaign goals and values. Moderation helps to prevent the spread of harmful or inappropriate content that could damage the campaign’s reputation or harm users.

By promoting responsible and respectful discourse, content moderation can create a positive and engaging community around the campaign. It can also help prevent legal issues arising from content that breaks hate speech or intellectual property laws.

5 types of content moderation

1. Pre-Moderation

Pre-moderation involves reviewing and approving all user-generated content before it’s published on the platform. This can include text, images, and videos. Moderators check the content against the platform’s community guidelines or terms of service and ensure it doesn’t violate any rules.

The main advantage of pre-moderation is that it allows platforms to control the content that is published on their site and to prevent any content that violates their community guidelines or terms of service from being displayed. However, this approach can be time-consuming and may cause delays in content publication.
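The sketch below models pre-moderation as a simple first-in, first-out queue in Python. The `moderator_approves` function is a hypothetical stand-in for a human moderator’s decision; the point is that the public list stays empty until someone reviews each submission, which is exactly where the publishing delay comes from.

```python
from collections import deque
from typing import Callable, Optional


class PreModerationQueue:
    """Nothing becomes visible until a moderator approves it."""

    def __init__(self) -> None:
        self.pending: deque = deque()  # submissions awaiting review, oldest first
        self.published: list = []      # what the public can see

    def submit(self, text: str) -> None:
        self.pending.append(text)

    def review_next(self, approve: Callable[[str], bool]) -> Optional[str]:
        """Review the oldest submission; publish it only if approved."""
        if not self.pending:
            return None
        text = self.pending.popleft()
        if approve(text):
            self.published.append(text)
        return text


def moderator_approves(text: str) -> bool:
    # Stand-in for a human moderator's judgment (hypothetical rule).
    return "free money" not in text.lower()


queue = PreModerationQueue()
queue.submit("Great launch event!")
queue.submit("Click here for free money")
print(queue.published)  # []: nothing is live until reviewed
queue.review_next(moderator_approves)
queue.review_next(moderator_approves)
print(queue.published)  # ['Great launch event!']
```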

2. Post-Moderation

Post-moderation is a content moderation technique where user-generated content is published on the platform first and reviewed afterward. Unlike pre-moderation, which reviews content before it goes live, post-moderation lets users post in real time; moderators then remove any content that violates the platform’s community guidelines or terms of service.

The main advantage of post-moderation is that content is published quickly and without delays. However, inappropriate or harmful content can remain visible on the platform until it is removed, exposing users to it in the meantime.
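Here is a minimal Python sketch of post-moderation, assuming review happens in periodic passes. The `violates` check is a hypothetical placeholder for a moderator or classifier. Recording how long each removed post was visible makes the trade-off measurable: the longer the gap between publishing and review, the longer harmful content stays on screen.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class PostModerationFeed:
    """Content goes live immediately and is reviewed afterward."""

    def __init__(self) -> None:
        self.live: Dict[int, Tuple[str, float]] = {}  # post_id -> (text, published_at)
        self.exposure: Dict[int, float] = {}          # post_id -> seconds a removed post was visible

    def publish(self, post_id: int, text: str, now: Optional[float] = None) -> None:
        self.live[post_id] = (text, time.time() if now is None else now)

    def review(self, violates: Callable[[str], bool], now: Optional[float] = None) -> None:
        """Remove violating posts and record how long each one was visible."""
        now = time.time() if now is None else now
        for post_id, (text, published_at) in list(self.live.items()):
            if violates(text):
                del self.live[post_id]
                self.exposure[post_id] = now - published_at


feed = PostModerationFeed()
feed.publish(1, "Nice photo!", now=0)
feed.publish(2, "spam spam spam", now=0)
feed.review(lambda text: "spam" in text, now=90)  # review pass 90 seconds later
print(sorted(feed.live))  # [1]
print(feed.exposure)      # {2: 90}
```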

3. Reactive Moderation

Reactive moderation involves responding to user complaints or reports of inappropriate or harmful content on a platform. Instead of proactively searching for inappropriate content, moderators only take action when they receive a complaint or report from a user.

The main advantage of reactive moderation is that it can be less resource-intensive than proactive moderation because moderators only have to review and take action on content reported by users. However, it may also result in inappropriate or harmful content remaining on the platform until a user reports it.
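One simple way to implement reactive moderation is to send a post for review once enough users have reported it. The Python sketch below assumes a hypothetical threshold of three distinct reporters; counting unique users rather than raw reports stops one person from forcing a review by reporting the same post over and over.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set

REPORT_THRESHOLD = 3  # hypothetical: distinct reports needed to trigger a review


class ReportTracker:
    def __init__(self, threshold: int = REPORT_THRESHOLD) -> None:
        self.threshold = threshold
        self.reporters: DefaultDict[str, Set[str]] = defaultdict(set)  # post -> reporting users
        self.review_queue: List[str] = []

    def report(self, post_id: str, user_id: str) -> None:
        """Record a report and queue the post once it reaches the threshold."""
        self.reporters[post_id].add(user_id)  # a set ignores repeat reports from one user
        if len(self.reporters[post_id]) == self.threshold:
            self.review_queue.append(post_id)  # == (not >=) queues each post only once


tracker = ReportTracker()
for user in ["alice", "alice", "bob"]:
    tracker.report("post-7", user)
print(tracker.review_queue)  # []: only two distinct reporters so far
tracker.report("post-7", "carol")
tracker.report("post-7", "dave")
print(tracker.review_queue)  # ['post-7']
```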

4. Automated Moderation

Automated moderation uses software algorithms, machine learning, and artificial intelligence (AI) to detect and remove inappropriate or harmful content from a platform. Automated moderation can be used with pre- or post-moderation or as a standalone technique.

The main advantage of automated moderation is that it can quickly and efficiently moderate large volumes of user-generated content. However, automated moderation may not be as accurate as human moderation, and there is a risk that it may mistakenly flag or remove content that does not violate the platform’s guidelines.
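The accuracy problem shows up even in the simplest form of automated moderation, a keyword filter. This Python sketch uses a hypothetical two-term blocklist; many real tools use machine learning models instead, but they face the same trade-off between catching violations and flagging innocent content.

```python
import re

BLOCKLIST = ["scam", "free money"]  # hypothetical terms


def naive_flag(text: str) -> bool:
    """Substring match: fast, but fires inside unrelated words."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


# \b anchors each term to word boundaries, so "scam" no longer matches "scampi".
_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(term) for term in BLOCKLIST) + r")\b",
    re.IGNORECASE,
)


def boundary_flag(text: str) -> bool:
    return _PATTERN.search(text) is not None


for comment in ["Try our garlic scampi", "This is a scam!", "Honest review: not a scam"]:
    print(f"{comment!r}: naive={naive_flag(comment)}, boundary={boundary_flag(comment)}")
```

Word boundaries fix the "scampi" false positive, but the third comment shows the limit of keyword rules: neither version can tell that "not a scam" defends the brand rather than accusing it.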

5. Distributed Moderation

Distributed moderation is a type of content moderation that involves a community of users, rather than a centralized moderation team or algorithm, to police and enforce platform rules. The idea behind distributed moderation is that a large group of users can collectively maintain the quality and safety of the platform by reporting inappropriate content and taking action against violators.

Users who repeatedly flag inappropriate content and have a history of accurate flagging may be granted more authority and responsibility, such as the ability to hide or remove content or approve or reject new content.

One key advantage of distributed moderation is that it can scale to large communities without requiring a large centralized moderation team. It can also help to reduce bias and improve the fairness of moderation decisions, as the community as a whole can weigh in on moderation decisions rather than relying on the judgment of a single person or algorithm.
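Granting more authority to users with a history of accurate flagging can be sketched as reputation-weighted voting. In this Python example, the weighting formula (1.0 plus the share of a user’s flags that were later confirmed) and the hide threshold are hypothetical choices, not an established standard. A post that reaches the threshold is hidden pending a final decision, and a post cleared on review becomes visible again.

```python
from collections import defaultdict

HIDE_THRESHOLD = 3.0  # hypothetical weighted score at which a post is hidden


class CommunityModeration:
    def __init__(self) -> None:
        self.accurate = defaultdict(int)  # user -> flags later confirmed as violations
        self.resolved = defaultdict(int)  # user -> flags that have received a final decision
        self.flags = defaultdict(set)     # post_id -> users who flagged it
        self.hidden = set()

    def weight(self, user: str) -> float:
        """Trust weight: 1.0 with no track record, up to 2.0 for perfect accuracy."""
        if self.resolved[user] == 0:
            return 1.0
        return 1.0 + self.accurate[user] / self.resolved[user]

    def flag(self, post_id: str, user: str) -> None:
        self.flags[post_id].add(user)
        score = sum(self.weight(u) for u in self.flags[post_id])
        if score >= HIDE_THRESHOLD:
            self.hidden.add(post_id)

    def resolve(self, post_id: str, violated: bool) -> None:
        """After a final decision, update each flagger's track record."""
        for user in self.flags[post_id]:
            self.resolved[user] += 1
            if violated:
                self.accurate[user] += 1
        if violated:
            self.hidden.add(post_id)
        else:
            self.hidden.discard(post_id)


community = CommunityModeration()
community.flag("post-1", "ana")
community.flag("post-1", "ben")
print("post-1" in community.hidden)  # False: two new users score 2.0
community.resolve("post-1", violated=True)  # both flags were accurate

community.flag("post-2", "ana")  # ana now carries weight 2.0
community.flag("post-2", "cal")  # a new user adds 1.0
print("post-2" in community.hidden)  # True: 2.0 + 1.0 reaches the threshold
```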

Most social media platforms combine several moderation methods to provide a comprehensive solution. For example, a platform might use automated moderation to detect and remove spam and other malicious activity, reactive moderation to respond to user reports of inappropriate content, and pre-moderation to stop harmful content before it is published.

What are the challenges of content moderation?

- The volume of content: The sheer volume of content on social media platforms can be overwhelming for brands to moderate. Even with dedicated teams, it can be difficult to keep up with the constant stream of posts, comments, and messages. This can result in inappropriate or harmful content going unchecked and potentially damaging the brand’s reputation.

- Time-sensitive nature of social media: Social media is a real-time platform, with content being created and shared at a rapid pace. Brands need to be able to monitor and respond to content quickly to avoid negative feedback or a crisis. This requires dedicated resources and tools to ensure that the brand is able to stay on top of social media activity.

- Context: Moderating content on social media platforms requires a deep understanding of the cultural, political, and social context in which the content is posted. This can be challenging for moderators, especially if they are unfamiliar with the nuances of different communities and cultures. It is also a challenge for AI moderation tools: the same word or image can be harmless in one context and harmful in another, and these tools often struggle to tell the difference.

- Balancing freedom of speech and brand reputation: Content moderation can be a tricky balancing act between protecting the brand’s reputation and respecting users’ freedom of expression. Brands need to have clear guidelines in place to ensure that they are moderating content fairly and consistently. They also need to be aware of local laws and regulations around free speech, particularly if they operate in multiple countries.

- Maintaining consistency: Consistency is key in content moderation, but it can be hard to maintain across multiple platforms and regions. Brands must ensure that their content moderation guidelines are clear and that all team members are trained to follow them. For example, a brand may have different guidelines for moderating content in the US and in Europe; even so, each set must be applied consistently and stay aligned with the brand’s overall values.

- Staying up-to-date on regulations and policies: Social media regulations and platform policies are constantly evolving, and brands must keep up with these changes to ensure they are moderating content appropriately. Failure to comply can result in fines or legal action, both of which can damage a brand’s reputation, so brands should track changes proactively and adjust their moderation practices to match.

Content moderation strategies for social media

Establish clear community guidelines

Brands should establish clear and concise community guidelines that outline what types of content are allowed on their page and what types are prohibited. These guidelines should be easy for everyone to find, ideally in a prominent place on your page, and should be updated regularly to reflect changes in user behaviour and platform policies. Clear guidelines set expectations for how users interact with your brand on social media.

When creating community guidelines, it’s important for brands to be transparent about their expectations and to ensure that the guidelines align with their brand values and mission. Some common guidelines include:

- No hate speech or discrimination

- No spamming or self-promotion

- Respectful language and behaviour

- No illegal or inappropriate content

By establishing clear community guidelines, brands can create a positive and welcoming online community for their followers. This can also help to prevent issues related to inappropriate content or behaviour on social media.

For example, if a brand sees a user posting inappropriate content or engaging in negative behaviour, it can reference its community guidelines and take appropriate action, such as removing the post or banning the user from its channel.

Implement a crisis management plan

A crisis management plan is a set of procedures and protocols that a brand can use to respond to unexpected events or crises that could impact its reputation or business operations.

The goal of a crisis management plan is to minimize the impact of a crisis on a brand’s reputation and to ensure that the brand can communicate effectively with its stakeholders, such as customers, employees, and investors. A crisis management plan typically includes the following steps:

- Identify potential crises: Brands should identify crises that could impact their business operations or reputation. For example, a product recall, a data breach, or a negative news story could all be considered potential crises.

- Develop response procedures: Brands should develop response procedures that outline the steps they will take in the event of a crisis. This might include designating a crisis management team, developing key messages, and determining how the brand will communicate with stakeholders.

- Test the plan: Brands should test their crisis management plan to ensure that it is effective and to identify any gaps or areas for improvement. This might include conducting crisis simulations or tabletop exercises.

- Respond to the crisis: If a crisis does occur, brands should follow their crisis management plan and take appropriate action to address the situation. This might include issuing a statement, communicating with stakeholders, or taking steps to mitigate the impact of the crisis.

By implementing a crisis management plan, brands can be better prepared to respond to unexpected events and protect their reputation on social media. For example, if a brand experiences a data breach, it can use its crisis management plan to communicate quickly with customers and stakeholders and take steps to mitigate the breach’s impact.

Hire and train human moderators

Human moderators bring human judgment to content moderation, helping to identify content that may be inappropriate or harmful. Brands and platforms can invest in hiring and training moderators so they have the skills and knowledge to moderate content effectively.

They can also provide valuable context and nuance to content moderation decisions, as they can consider the cultural, political, and social context in which the content was posted. However, this type of moderation can be expensive, and brands may struggle to hire and train enough moderators to moderate all content effectively.

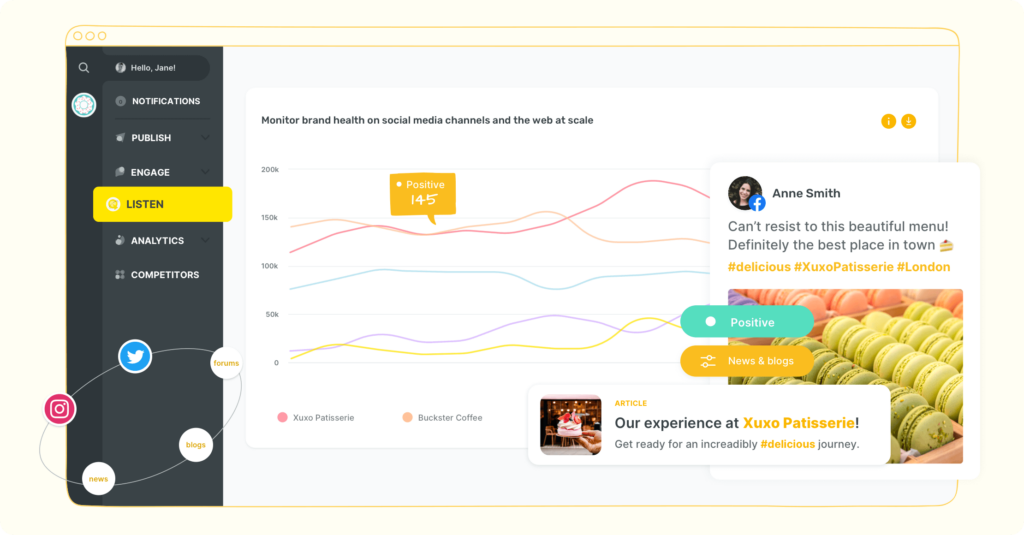

Use social listening tools

Social listening tools can help brands monitor user feedback and complaints, as well as identify trends and issues that may be affecting their community. This can be valuable for identifying potential issues before they escalate.

These tools work by using algorithms to scan online content and identify keywords and phrases that are relevant to the brand. They can then provide reports that summarize the data, such as the number of mentions of the brand, the sentiment of the comments (positive, negative, or neutral), and the geographic location of the users.
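The keyword-scanning and sentiment steps described above can be illustrated with a toy Python sketch. The brand terms and the tiny positive and negative word lists are hypothetical; commercial tools generally use far more sophisticated sentiment models, which handle negation and sarcasm much better than simple word counting.

```python
import re
from collections import Counter

BRAND_TERMS = {"acme", "#acmeshoes"}                 # hypothetical brand keywords
POSITIVE = {"love", "great", "comfortable", "fast"}  # toy sentiment lexicon
NEGATIVE = {"broken", "refund", "late", "terrible"}


def tokenize(text: str) -> list:
    """Lowercase words, keeping a leading # so hashtags survive."""
    return re.findall(r"#?\w+", text.lower())


def sentiment(tokens: list) -> str:
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def listen(posts: list) -> Counter:
    """Keep posts that mention the brand and tally mentions by sentiment."""
    summary = Counter()
    for text in posts:
        tokens = tokenize(text)
        if BRAND_TERMS.intersection(tokens):
            summary["mentions"] += 1
            summary[sentiment(tokens)] += 1
    return summary


posts = [
    "I love my Acme sneakers, so comfortable",
    "#AcmeShoes arrived broken, want a refund",
    "Acme store opens at 9",
    "Terrible weather today",
]
print(listen(posts))
```

The last post is ignored because it never mentions the brand, even though it contains a negative word; that filtering step is what keeps a listening report focused on your own community.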

Using social listening tools, brands can gain valuable insights into their online community and identify potential issues before they become major problems. For example, if a brand sees a sudden increase in negative comments or complaints about a specific product or service, it can use this information to address the issue and prevent it from escalating.

In addition to identifying potential issues, social listening tools can also be used to track user sentiment and identify trends in customer preferences. This can be valuable for developing new products or services, improving customer service, and refining marketing strategies.

Some examples of social listening tools include Sociality.io, Hootsuite Insights, Mention, Brandwatch, and Sprout Social. These tools offer a variety of features, such as sentiment analysis, keyword tracking, and competitive analysis, and can be customized to meet a brand’s specific needs.

Do’s and don’ts of content moderation

Do’s

- Establish clear and concise community guidelines that outline what types of content are allowed and what types are prohibited.

- Train moderators to understand platform policies and apply them consistently.

- Use automated moderation tools to help identify and remove inappropriate content.

- Encourage users to report inappropriate content using easy-to-use reporting tools.

- Prioritize the safety and well-being of users when making moderation decisions.

- Stay up-to-date with legal requirements and changes in user behaviour to ensure that moderation policies remain effective.

- Communicate moderation decisions and policies to users in a transparent and timely manner.

- Continuously monitor and evaluate moderation practices to identify areas for improvement.

Don’ts

- Ignore reports of inappropriate content or fail to act on them promptly.

- Implement moderation policies that are overly restrictive or inconsistent.

- Use automated moderation tools as a substitute for human moderators without proper oversight and review.

- Over-rely on user reporting without considering the context and motivations behind reports.

- Allow personal biases or opinions to influence moderation decisions.

- Use moderation as a way to suppress free speech or silence dissenting opinions.

- Use moderation to retaliate against users who have criticized your brand.

- Implement moderation policies that violate users’ privacy or data protection rights.

It’s important to note that the specific do’s and don’ts of content moderation may vary depending on the platform, its user base, and the types of content being moderated. Brands should strive to establish policies and practices that are tailored to their unique circumstances and that prioritize the safety and well-being of their audience.

Content moderation tools and solutions

1. Sociality.io

Sociality.io is a social media management tool that offers moderation features for brands. With Sociality.io, you can monitor your social media channels for mentions of your brand or industry and filter out unwanted content. You can use Sociality.io’s Smart Inbox to see all your social media messages in one place and respond to them quickly. In addition, the analytics and reporting features can help you measure the impact of your social media efforts.

It also offers a feature called “Sentiment Analysis,” which uses machine learning to help you identify the sentiment (positive, negative, or neutral) of social media conversations about your brand or industry. This can help you understand how your brand is perceived online and spot potential issues or opportunities.

Overall, Sociality.io is a powerful tool for brands looking to monitor and manage their social media presence. It can be especially useful for brands looking to understand the sentiment of online conversations about their brand.

2. Respondology

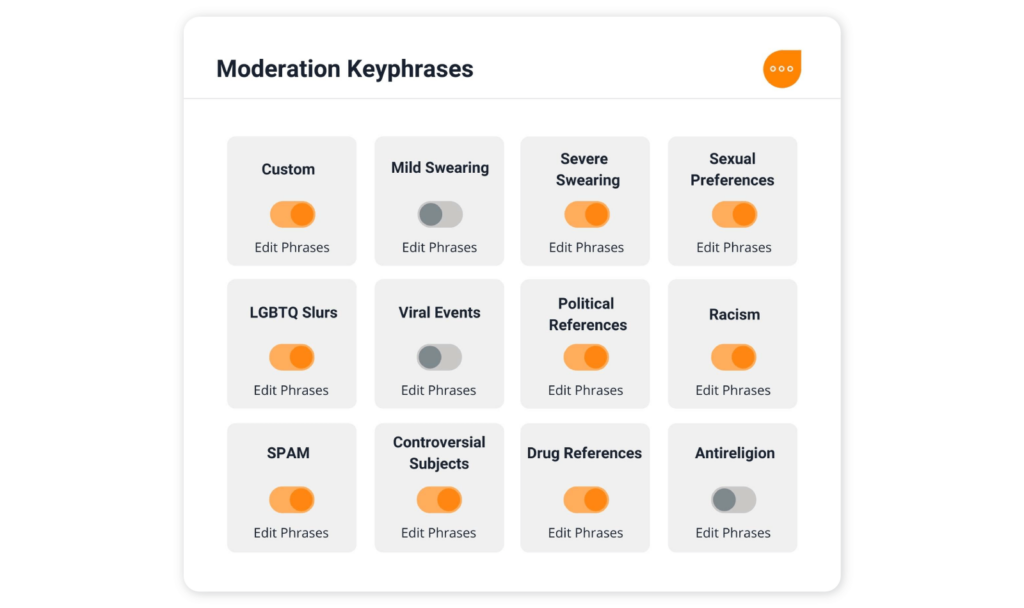

Respondology is a content moderation tool that allows brands to monitor and moderate social media content across various platforms. With Respondology, brands can track keywords, hashtags, and mentions related to their brand or industry and moderate any inappropriate or harmful content that appears.

This tool uses artificial intelligence and human moderation to filter out unwanted content and ensure that brands can maintain a positive online reputation. It allows brands to set up customized moderation rules, so they can be notified in real time of any content that violates their policies or values. They can then approve or deny the content and respond to the user accordingly.

3. BrandBastion

BrandBastion is a content moderation solution that uses a combination of artificial intelligence and human moderation to monitor social media channels for harmful or inappropriate content.

Brands can set up customized moderation rules and receive real-time alerts when any content violates their policies or values. The platform also offers sentiment analysis and reporting features, which can help brands identify trends in user feedback and improve their overall social media strategy.

4. Two Hat

Two Hat is a content moderation solution that uses machine learning and artificial intelligence to analyze user-generated content and flag potentially harmful or inappropriate content.

The platform can be customized to match each brand’s specific needs and can be used to moderate various types of user-generated content, including text, images, and videos. Two Hat offers a range of features, including real-time moderation, automated workflows, and customizable rules.

5. CleanSpeak

CleanSpeak is a content moderation tool that uses a combination of automated and manual moderation to filter out harmful or inappropriate content.

The platform offers a range of features, including profanity filtering, image moderation, and spam detection. It can moderate user-generated content across various channels, including social media, chat, and forums. CleanSpeak also offers integrations with popular content management systems, such as WordPress and Drupal.

6 best content moderation practices

1. Define your brand voice and values

Before you start posting on social media, it’s important to define your brand voice and values. Your brand voice is the tone and style used in your social media posts, while your brand values are the principles and beliefs that guide your brand’s actions. By defining your brand voice and values, you can ensure your social media posts are consistent with your brand identity.

When your brand voice is consistent across all your content, your audience comes to understand your values. Over time, this can discourage them from using unacceptable language when interacting with your brand and your content.

2. Clearly define community guidelines

Community guidelines should clearly define acceptable and unacceptable behaviour on a platform. These guidelines should be easy to understand and enforced consistently. Brands should establish guidelines that reflect their values and mission and outline what kind of content is not allowed, such as hate speech, harassment, or spam.

Creating community guidelines helps ensure your social media channels remain positive, safe, and respectful. Guidelines should cover topics like language, tone, behaviour, and the types of content allowed. By outlining clear expectations, you can help prevent negative comments, spam, or other content that doesn’t align with your brand values. You can also outline the consequences of violating your community guidelines, which can help deter inappropriate behaviour.

3. Use a variety of moderation techniques

Brands should employ a range of moderation techniques to address different types of content moderation challenges. Automated moderation, reactive moderation, and pre- and post-moderation are all effective techniques that can be used alone or in combination to achieve the desired level of moderation.

4. Respond quickly to user concerns

Brands should respond promptly to user concerns, whether users are reporting inappropriate content or seeking help with a moderation-related issue. A quick response can help prevent issues from escalating and shows users that the brand takes moderation seriously.

5. Continuously evaluate and improve moderation efforts

Brands should continuously evaluate the effectiveness of their moderation efforts and make adjustments as needed. This includes regularly reviewing and updating community guidelines and policies, as well as tracking and analyzing moderation metrics to identify areas for improvement.
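Tracking moderation metrics can start small. The Python sketch below assumes each moderation decision is logged as a hypothetical `Decision` record and computes two example metrics: how often automated flags are upheld by a human (a rough precision measure) and the median time from flag to action. Which metrics matter most will depend on your own goals.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Decision:
    source: str               # "auto" or "user_report": what flagged the content
    upheld: bool              # did a moderator confirm the violation?
    minutes_to_action: float  # time from flag to final decision


def moderation_metrics(decisions: List[Decision]) -> Dict[str, Optional[float]]:
    auto = [d for d in decisions if d.source == "auto"]
    return {
        # Share of automated flags a human agreed with; low values mean many false positives.
        "auto_flag_precision": sum(d.upheld for d in auto) / len(auto) if auto else None,
        "median_minutes_to_action": (
            median(d.minutes_to_action for d in decisions) if decisions else None
        ),
    }


log = [
    Decision("auto", True, 10),
    Decision("auto", False, 30),
    Decision("user_report", True, 20),
]
print(moderation_metrics(log))  # {'auto_flag_precision': 0.5, 'median_minutes_to_action': 20}
```

Watching these numbers over time shows whether guideline or tool changes actually help: a falling precision suggests automated rules are too aggressive, and a rising response time suggests the team is under-resourced.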

6. Use automation tools to filter content

There are several automation tools available that can help you filter out spam, irrelevant content, or inappropriate comments. For example, you can use tools like Sociality.io to automatically filter out posts that contain certain keywords, phrases, or hashtags. You can also use tools like Google Alerts to monitor mentions of your brand across the web. By leveraging automation tools, you can save time and ensure your social media channels are free from unwanted content.
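Keyword, phrase, and hashtag filters can be thought of as a list of rules, each paired with an action. The Python sketch below is a generic illustration using assumed names (`Rule`, `apply_rules`) and hypothetical example rules; it is not how Sociality.io or any other tool is actually configured. When several rules match, it applies the most severe action, and it uses plain substring matching for brevity.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rule:
    pattern: str  # keyword, phrase, or hashtag to look for (matched case-insensitively)
    action: str   # "hide" or "review"


RULES: List[Rule] = [
    Rule("#giveawayscam", "hide"),
    Rule("dm me for", "hide"),
    Rule("refund", "review"),
]

SEVERITY = {"allow": 0, "review": 1, "hide": 2}


def apply_rules(comment: str, rules: List[Rule] = RULES) -> str:
    """Return the most severe action triggered by any matching rule."""
    text = comment.lower()
    action = "allow"
    for rule in rules:
        if rule.pattern.lower() in text and SEVERITY[rule.action] > SEVERITY[action]:
            action = rule.action
    return action


for comment in ["DM me for a free phone", "Still waiting on my refund", "Love the new colours!"]:
    print(comment, "->", apply_rules(comment))
```

Sending borderline matches such as "refund" to review instead of hiding them keeps genuine customer complaints visible to your team rather than silently filtering them out.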

What is the future of content moderation?

The future of content moderation on social media is likely to involve a combination of human moderators and AI-powered tools. As technology advances, AI tools will become more sophisticated at identifying and removing harmful content. However, human moderators will still be needed to provide context-specific judgment and decision-making.

Platforms must continue to update their policies and guidelines to address evolving forms of harmful content. Platforms that manage to balance privacy, freedom of expression, and safety are likely to be the most successful in the long run.